I hope these few clarifications answer your questions.

I developed my own DRL (Deep Reinforcement Learning) code in the Haxe language, with the objective of encapsulating it in logic nodes and traits in Armory. The aim is to be able to test different kinds of algorithms and architectures for the DRL and NN parts, as well as the different simulated sensors, of the kind we can actually use in an industrial environment, that define each state during NN training.

As I have shown in different posts, the first results are OK. The main issue is the huge number of nodes (existing or new) and traits needed to build an application like the “tank” or “robotic arm” ones.

So I am currently considering redesigning the software from top to bottom, distributing the RL components in a composable way with an ECS-like (Entity Component System) approach. The objectives are also to get independent, threadable parts and to make it possible to develop a new concept, the “Dynamic of a Layer in a Neural Network”, which defines a form of evolution over time of the NN layer, similar to how a target is handled in radar-system software.
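As a rough illustration of the ECS-like idea (a sketch in Python rather than Haxe, and not the actual Armory code; every class and function name here is hypothetical): components are plain data, entities are just ids, and systems are independent functions that only touch the components they query, which is what makes them candidates for running as separate tasks.

```python
from dataclasses import dataclass


# Components are plain data. All names in this sketch are hypothetical.
@dataclass
class Sensor:
    reading: float = 0.0


@dataclass
class Policy:
    gain: float = 1.0
    action: float = 0.0


class World:
    """Stores components by type, keyed by entity id."""

    def __init__(self):
        self.next_id = 0
        self.components = {}  # component type -> {entity id -> component}

    def create_entity(self, *components):
        eid = self.next_id
        self.next_id += 1
        for c in components:
            self.components.setdefault(type(c), {})[eid] = c
        return eid

    def query(self, *types):
        """Yield (entity id, components...) for entities owning all given types."""
        stores = [self.components.get(t, {}) for t in types]
        for eid in stores[0]:
            if all(eid in s for s in stores[1:]):
                yield (eid, *(s[eid] for s in stores))


def sense_system(world, observations):
    # Copies raw observations into Sensor components.
    for eid, sensor in world.query(Sensor):
        sensor.reading = observations.get(eid, 0.0)


def policy_system(world):
    # Stand-in for a NN forward pass: a simple proportional rule.
    for eid, sensor, policy in world.query(Sensor, Policy):
        policy.action = policy.gain * sensor.reading


world = World()
tank = world.create_entity(Sensor(), Policy(gain=2.0))
arm = world.create_entity(Sensor(), Policy(gain=-0.5))
sense_system(world, {tank: 1.5, arm: 4.0})
policy_system(world)
```

Because each system reads and writes only its own component types, adding a new sensor or a new learning algorithm means adding a component and a system rather than wiring more nodes together.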

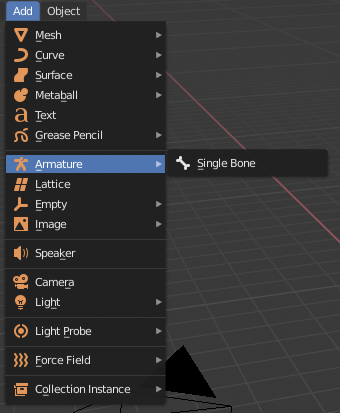

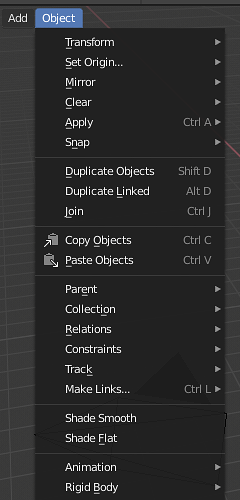

The first main task is to get a kind of top-down hierarchical control, as I found is already done in the Bonsai solution. I think the simplest way available today is to reuse Armory's linked 3D objects and parenting, combined with a kind of ECS design approach, in order to encapsulate resource requirements within short-running compute tasks that have their own independent data. That will then make it easier to use a GPU or CPU cluster during the training of “brains” for sub-systems, or during gameplay with the trained “brains” for the whole system.
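The parent/child idea can be sketched as a small tree in which each parent turns its goal into sub-goals for its children. This is a Python illustration only; `BrainNode`, `decide` and the tank example are hypothetical and are not taken from Armory or Bonsai.

```python
class BrainNode:
    """A node in a parent/child tree; each node owns a small 'brain' (hypothetical)."""

    def __init__(self, name, decide=None):
        self.name = name
        # decide maps a goal to {child name: sub-goal}; leaves do not need one.
        self.decide = decide
        self.children = {}
        self.parent = None

    def add_child(self, child):
        child.parent = self
        self.children[child.name] = child
        return child

    def step(self, goal):
        """Propagate a goal top-down and return a flat list of (leaf name, goal)."""
        if not self.children:
            return [(self.name, goal)]
        commands = []
        for child_name, sub_goal in self.decide(goal).items():
            commands.extend(self.children[child_name].step(sub_goal))
        return commands


# The top-level brain splits a mission into one sub-goal per sub-system.
def tank_decide(goal):
    return {"turret": goal["target_angle"], "tracks": goal["speed"]}


tank = BrainNode("tank", decide=tank_decide)
tank.add_child(BrainNode("turret"))
tank.add_child(BrainNode("tracks"))
commands = tank.step({"target_angle": 30, "speed": 2})
```

In a real setup each `decide` would be a trained policy, and each leaf could be trained on its own before the whole tree is run together.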

I am thinking of using this possible clustering of brain training to, for example, find the best parameters for a NN given a sub-system brain, the training environment used, and so on. This amounts to a kind of genetic algorithm that yields a NN which performs properly for the sub-system its brain belongs to.
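A minimal sketch of such a search, assuming a plain elitist genetic algorithm over two hyperparameters (in Python, not the Haxe code; `evolve`, `toy_fitness` and the parameter names are hypothetical). The fitness function is a cheap stand-in for "train the sub-system brain and return its score", which is the expensive step that could be spread over a cluster.

```python
import random


def evolve(fitness, bounds, pop_size=20, generations=30, mutation_scale=0.1, seed=0):
    """Elitist genetic algorithm over real-valued parameters (illustrative only)."""
    rng = random.Random(seed)
    names = list(bounds)

    def random_individual():
        return {n: rng.uniform(*bounds[n]) for n in names}

    def crossover(a, b):
        # Uniform crossover: each parameter comes from one of the two parents.
        return {n: (a[n] if rng.random() < 0.5 else b[n]) for n in names}

    def mutate(ind):
        # Gaussian mutation, clamped to the allowed range.
        child = {}
        for n in names:
            lo, hi = bounds[n]
            value = ind[n] + rng.gauss(0.0, mutation_scale * (hi - lo))
            child[n] = min(hi, max(lo, value))
        return child

    population = [random_individual() for _ in range(pop_size)]
    history = []  # best fitness seen at each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        elite = population[: pop_size // 4]  # the best quarter survives unchanged
        children = [
            mutate(crossover(rng.choice(elite), rng.choice(elite)))
            for _ in range(pop_size - len(elite))
        ]
        population = elite + children
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history


def toy_fitness(params):
    # Stand-in score that peaks at learning_rate=0.01 and hidden_units=64.
    lr_term = ((params["learning_rate"] - 0.01) ** 2) * 1e4
    size_term = ((params["hidden_units"] - 64) / 64) ** 2
    return -(lr_term + size_term)


bounds = {"learning_rate": (0.0001, 0.1), "hidden_units": (8, 256)}
best, history = evolve(toy_fitness, bounds)
# hidden_units is a float here; round it before building an actual network.
```

Since every individual in a generation is scored independently, the calls to `fitness` are the natural unit to hand out to separate CPU or GPU workers.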

So the code is constantly evolving and being redesigned, and it includes special hacks (“bidouilles”) to get results and performance out of Haxe, logic nodes and traits. It therefore differs greatly from the well-made general-purpose solutions found in libraries such as TensorFlow, but it allows me to explore and better understand the DRL algorithms coming out of today's research, a very active area, and to test new ways of using them inside innovative software designs, thanks to what the promising Armory 3D environment offers us.

My biggest concern is the current lack of interest from its creator, Lubos, in sharing with the community. Some people were ready to help him support Armory, but they are now beginning to tire of the deafening silence that meets their proposals. It’s worrying for the future.

(500 ms / 25 predicts)!

A LogicNode is not a big deal. And there are a lot of resources for learning Keras/TensorFlow.