Continuing the discussion from Procedural animation and Motion matching:

I think it would be great if we could get some kind of motion capture system into Blender that we could use for our Armory games. I’ve recently found some machine-learning-based solutions for recognizing human poses from single images or videos, which could make for a very economical motion capture solution that needs no expensive equipment or setup.

- TensorFlow.js demo (from the TensorFlow.js website)

- OpenPose. Somebody is also working on a Blender plugin: motion-capture-in-blender-via-openpose (not sure if it works)

Either of these could be a candidate for building a Blender integration for motion capture. OpenPose definitely seems to be more robust, but TensorFlow.js is easier to get started with, and it might be easier to hack and tweak because of that. To build a 3D representation of a pose you probably need multiple images, but you might also be able to leverage the 2D pose to provide an estimated 3D pose (I don’t know if that is possible or not).
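To make the "multiple images" idea a bit more concrete, here is a rough sketch of how one keypoint seen from two cameras could be turned into a 3D point. It is not taken from OpenPose or TensorFlow.js. It assumes the two cameras are already calibrated, so that each detected 2D keypoint has been converted into a ray (a camera position plus a direction) in a shared world space. The function names `triangulate_midpoint` and `triangulate_pose` and the keypoint names are made up for illustration. The technique is the simple midpoint method: find the closest points on the two rays and take the point halfway between them.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(origin_a: Vec3, dir_a: Vec3,
                         origin_b: Vec3, dir_b: Vec3) -> Vec3:
    """Midpoint between the closest points of two rays (hypothetical helper)."""
    w = _sub(origin_a, origin_b)
    a = _dot(dir_a, dir_a)
    b = _dot(dir_a, dir_b)
    c = _dot(dir_b, dir_b)
    d = _dot(dir_a, w)
    e = _dot(dir_b, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays never converge, so no depth can be recovered.
        raise ValueError("rays are parallel; keypoint cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter along ray A
    t = (a * e - b * d) / denom  # parameter along ray B
    closest_a = _add(origin_a, _scale(dir_a, s))
    closest_b = _add(origin_b, _scale(dir_b, t))
    return _scale(_add(closest_a, closest_b), 0.5)


def triangulate_pose(cam_a: Vec3, rays_a: Dict[str, Vec3],
                     cam_b: Vec3, rays_b: Dict[str, Vec3]) -> Dict[str, Vec3]:
    """Triangulate every keypoint that both cameras detected."""
    return {
        name: triangulate_midpoint(cam_a, rays_a[name], cam_b, rays_b[name])
        for name in rays_a
        if name in rays_b
    }


if __name__ == "__main__":
    # Two cameras one unit left and right of the origin, both seeing a "nose"
    # keypoint that sits five units in front of them.
    pose = triangulate_pose(
        (-1.0, 0.0, 0.0), {"nose": (1.0, 0.0, 5.0)},
        (1.0, 0.0, 0.0), {"nose": (-1.0, 0.0, 5.0)},
    )
    print(pose)
```

With real detections the two rays will not intersect exactly, which is why the midpoint is used instead of an exact intersection. Getting the rays in the first place (camera calibration) is the harder part and is not covered here.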

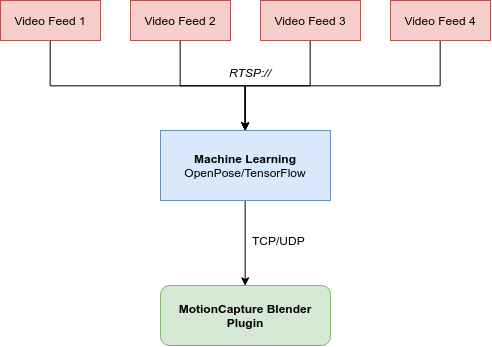

Here is the basic architecture I was thinking of:
