HDR Lightmaps [Addon]

Overview

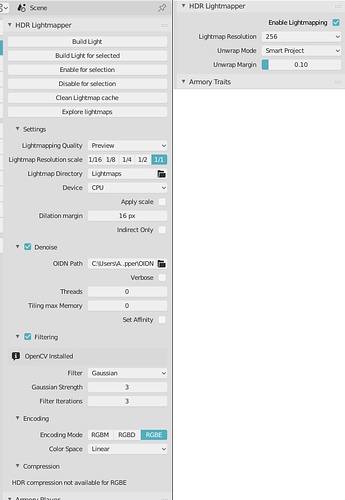

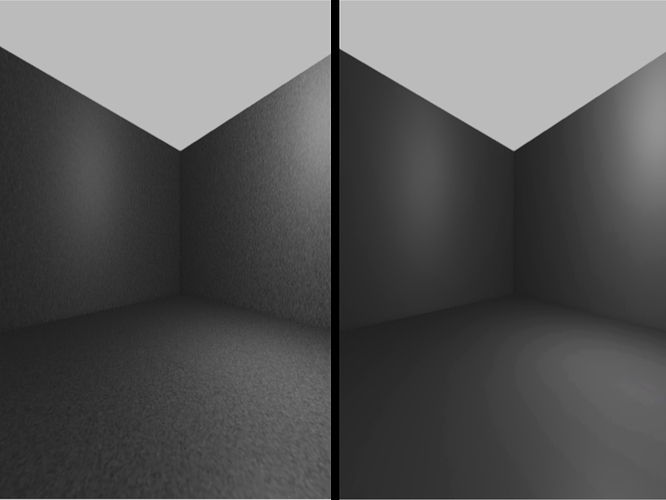

I’ve recently begun working on bringing lightmapping into Armory, using Cycles for the global illumination. So far I’ve gotten some quite nice results. The lightmapper uses the Armory baketool interface and includes a great bundled denoiser from Intel, postprocessing tools and HDR encoding.

What is HDR lightmapping and why use it?

Lightmaps are essentially textures that hold information about the surface luminance of objects. They have been used in games for a long time, as they’re cheap and easy to work with. Defining a scene with ordinary dynamic lights usually has two downsides. First, regular shadow-casting lights are still rather expensive on the GPU, even with deferred rendering and especially on mobile devices. Second, they don’t bounce the light around in an environment, meaning they only provide direct lighting information unless you add expensive secondary means of doing this (such as Voxel GI or SSDO).

With HDR Lightmaps, you can potentially have unlimited lights in your scene, as these are baked into your textures, not to mention that they’re much less expensive than dynamic lights. The difference between regular lightmaps and HDR lightmaps is the larger range: where normal lightmaps have a range from 0 to 1, HDR maps can act as light sources with values from 0 to 6 in gamma space, or 6^2.2 (roughly 51.5) in linear space.

Researching how other engines do it

If we compare it to the other “main” game engines on the market, they all employ similar means of lightmapping, but with different encoding schemes and different ways of calculating the GI.

In Unity, they recently shifted from primarily using Enlighten (a semi-realtime radiosity solution; I never got it to work right) to using their own Progressive Lightmapper (PLM). The PLM is a CPU-based (with GPU support a work in progress) path-tracing lightmapping tool, similar to Cycles, which progressively renders out a lightmap and filters it using either Gaussian or À-Trous filtering. The result is then encoded either as dLDR or RGBM, or stored in BC6H (able to use EXR files). There’s also the possibility of additional light information maps for storing dominant light direction and specularity.

In Unreal Engine, a tool called Lightmass is used to calculate the global illumination, but unlike PLM, which uses path tracing, Lightmass uses photon mapping to calculate the GI. Photon mapping is faster for interior scenes, needs less filtering (it becomes splotchy rather than noisy) and is faster with caustics (although this is unsupported in UE4). The lightmaps are directional, similar to Unity’s, with per-pixel normals stored with the lightmap, which ends up as two sampled lightmaps. I’m not entirely sure, but the encoding looks like a variation of LogLuv.

Frostbite primarily uses something called Flux, which is a path tracer that I suppose is somewhat similar to what Unity uses. They mention post-process denoising, but I’m not sure if they mean actual ML denoising, such as the one implemented in this addon. They seem to have a specific workflow where rough proxies are used for lightmaps, and the lightmaps from these proxies are then projected onto the underlying geometry. I suppose the pros of doing this could be related to less hassle with seams. How exactly this happens, I don’t know, but the presentation is worth a read. I might be able to make something similar, but I’ll have to look into how the Data Transfer modifier works exactly.

CryEngine and Unigine don’t use lightmapping; they seem to use some kind of Voxel GI.

How is this different from what the Armory baketool does?

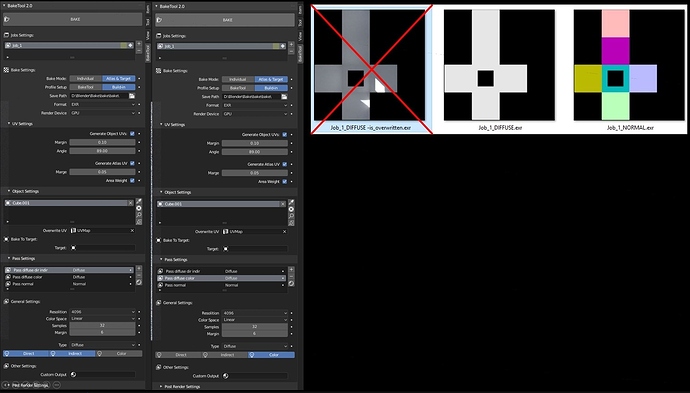

The Armory baketool currently only bakes “complete maps”, meaning a fully flattened 8-bit map used as the diffuse map. For the same reason, those lightmaps primarily work with the “lightmap” renderpath preset, which has a limited shading suite and lacks dynamic lights. It is similar to old games like Quake, with pre-baked 8-bit diffuse maps containing both lights and shadows, just with higher quality.

With HDR Lightmaps, there’s support for PBR materials and shading in deferred lighting mode. Think of it this way: by default everything is black, like in reality, and the lighting adds up from 0 to the specific luminance. So instead of a flat 8-bit map, each lightmapped object gets a high dynamic range. To conserve memory, the baked 32-bit HDR maps are encoded into 8-bit RGBM/RGBD lightmaps, where the alpha channel acts as an HDR component. Decoding is done through pre-made shader node groups.
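To make the RGBM idea a bit more concrete, here is a minimal Python sketch of the commonly used RGBM scheme for a single pixel. It is not the addon's actual code: the function names, the 2.2 gamma approximation and the use of 6 as the encoding range (taken from the 0 to 6 gamma-space range mentioned earlier) are assumptions for illustration only.

```python
import math

RGBM_RANGE = 6.0  # assumed maximum, matching the 0 to 6 gamma-space range
GAMMA = 2.2       # assumed simple power-law gamma


def encode_rgbm(r, g, b):
    """Encode one linear HDR pixel into four 8-bit RGBM channel values."""
    # Move to gamma space and scale into 0..1, clamping anything above the range.
    scaled = [min(max(c, 0.0) ** (1.0 / GAMMA) / RGBM_RANGE, 1.0) for c in (r, g, b)]
    # The multiplier is the brightest channel, rounded up to the next 8-bit step
    # so that the colour channels never exceed 1 after the division below.
    m = max(max(scaled), 1.0 / 255.0)
    m = math.ceil(m * 255.0) / 255.0
    rgb = [round(c / m * 255.0) for c in scaled]
    return rgb[0], rgb[1], rgb[2], round(m * 255.0)


def decode_rgbm(r8, g8, b8, m8):
    """Decode 8-bit RGBM channel values back into a linear HDR pixel."""
    m = m8 / 255.0
    # Undo the scaling (colour * multiplier * range), then return to linear space.
    return tuple((c / 255.0 * m * RGBM_RANGE) ** GAMMA for c in (r8, g8, b8))
```

Calling `decode_rgbm(*encode_rgbm(10.0, 2.0, 0.5))` should give back values within a few percent of the input, while anything brighter than the range is clamped. In the engine the decode half would live in the shader; this version only shows the arithmetic.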

Other pros of using lightmaps are that ordinary lights still work and are able to cast light and shadows onto objects with lightmaps. Also, as the lightmaps are baked, they can be reused for different materials and textures.

What about noise? Isn’t that still a problem?

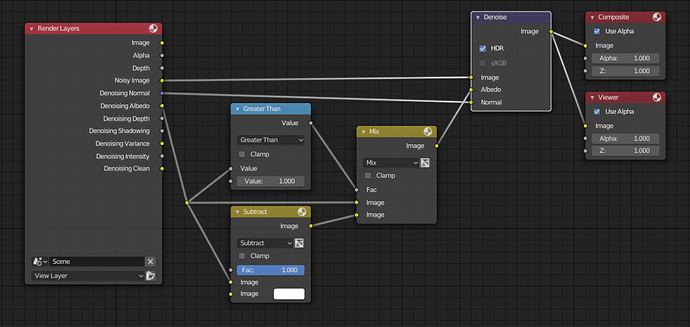

No, handling noise is another part of the addon. First it denoises the lightmaps using Intel’s Open Image Denoise library (bundled), which uses deep learning to filter out noise produced specifically by path tracers using Monte Carlo ray tracing methods (which Cycles does). The denoising is fast (faster than the native Blender denoiser) and effective; the only downside is that the images need to be converted to .pfm files first. Blender doesn’t support that format, but the conversion is handled by the addon. Afterwards, there’s the option of GIMP-based post-processing, which might be useful as not all noise might be removed (especially if very few samples are used). Additional blur and despeckle filters help with that, and all of it is handled by the addon; you only need to provide a path to your GIMP binary folder. (NOT YET IMPLEMENTED / READY)

How does it work?

It works by building a lightmap. Technically it’s a diffuse map, but with only the light contribution baked out, either as indirect, direct or combined. This map is baked as a 32-bit float map for each object and then converted to the .pfm format so it can be denoised. Denoising happens with Intel’s denoiser and takes a few seconds. The result is then imported back into Armory, encoded into RGBM for compatibility reasons, and automatically applied with a decoding node setup. This setup configures itself according to your materials. Depending on your configuration, your encoded file might go on a trip into your local GIMP installation for further postprocessing.
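For anyone curious about the .pfm conversion step, the format itself is very simple: a short text header followed by raw 32-bit floats. Below is a rough Python sketch of a writer. It is not the addon's own converter; the function name `write_pfm` and the assumption that the input is a flat list of RGB floats in top-to-bottom row order are mine.

```python
import struct


def write_pfm(path, width, height, pixels):
    """Write a flat list of RGB floats (rows top-to-bottom) as a colour PFM file."""
    if len(pixels) != width * height * 3:
        raise ValueError("expected width * height * 3 float values")
    with open(path, "wb") as f:
        # "PF" marks a 3-channel image; a negative scale marks little-endian data.
        f.write(f"PF\n{width} {height}\n-1.0\n".encode("ascii"))
        row_len = width * 3
        # PFM stores scanlines bottom-to-top, so emit the rows in reverse order.
        for y in range(height - 1, -1, -1):
            row = pixels[y * row_len:(y + 1) * row_len]
            f.write(struct.pack(f"<{row_len}f", *row))
```

If your source data already stores its rows bottom-up, drop the reversal and write the rows in their existing order.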

How do I actually use it?

! I’ll make a video tutorial sometime next week, if I can still edit posts after that long !

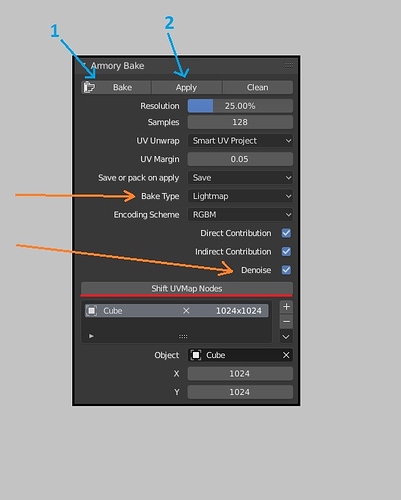

To install: Just copy or clone it into your armsdk folder. Open Armory, scroll down to your “Armory Bake” tab, and set bake type to “Lightmap”.

Set your UV margin to something like 0.05 to give your packing a little space, depending on your lightmap size and filtering options.

Also, note for now it is recommended to choose the option “Save” on apply, as packing it into the .blend file seems to cause crashes with the current Blender beta version.

When you bake, 32-bit float maps will be baked and saved into your “/Lightmaps” folder if the option is chosen.

When you click Apply afterwards, these maps will be saved and denoised. When they are denoised, a node-setup will be applied automatically to your material setups, and applied to your basecolor.

By default, the lightmaps might seem a bit weak compared to Cycles/Eevee due to different color management, but this can be adjusted in a few ways:

- Try messing around with the Armory tonemappers
- Crank up the exposure value (look under “Film” in Cycles)
- Try out the latest Postprocess settings (Addon here: https://github.com/Naxela/PPM). It opens up node settings such as exposure, gamma, gain, etc., which can help here.

I’ve provided a function to easily shift all UV maps, toggling between Eevee view and Armory view, as Armory for some reason shifts the UV index on baked maps.

Future / Todo

The current version is very early work in progress, and there’s still a lot to do but you can still use it as it currently is if you want.

- Distributed network rendering for baking lightmaps - Maybe look into crowdrender blender plugin
- Add the possibility of using normal and albedo maps, for better/faster denoising
- Add separable direct and indirect contribution
- Lightmapper Tools palette
- Lightmap to proxy, baking and projection
- Investigate LogLuv encoding
- Add manual encoding range
- More tutorials and tips
- Step-based configuration interface
- Quality presets
- Compression
- Lightmap conditional sets and blending
- Directional lightmaps
- Atlas packing
- Texel density measurements
- SciPy processing (alternative to GIMP)

Gallery

Tips and tricks

-

It works best in well lit environments - if you want dim/dark environments, it’s better to bake it as bright, and then decrease the light levels through exposure values to better fill the range.

-

Before you apply/denoise, make sure you turn your displays to “Wireframe” or “Solid” mode, as it seems to crash otherwise once the textures are applied (Due to the Blender Eevee version that Armory 0.6 comes bundled with)

-

If your results look weird, it MIGHT help to:

-

Change your world/environment settings

-

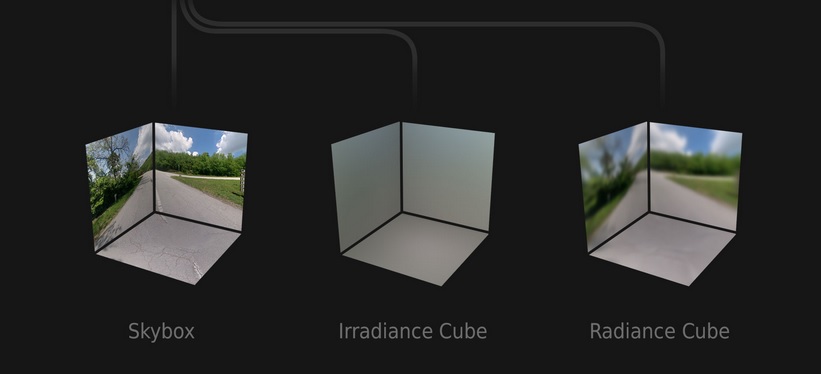

OR turn of “Irradiance” and “Radiance” probes under the renderpath world settings, maybe due to spherical harmonics being applied to the baked result.

-

Use RGBM encoding for most of your stuff, although RGBD might look better with highlights in rare cases.

-

Don’t use underscores for material or object names, it breaks the bake-tool! Use dots or lines instead

-

Due to the compression of light values, interior values can really be a pain but it’s possible to get good results with even large ranges

-

Controlling the lightmap properties with a brightness/contrast node can be useful

Download

Get the latest copy from github here:

Keep in mind, it’s early WIP and might not work and/or require a little bit of messing around with settings!

Post-Process Module (PPM) Addon

PPM is another addon for Armory that introduces post-processing as nodes. It’s still at an early stage, but should be usable. Like the HDR Lightmap addon, it’s only available for Armory 0.6 so far; it’s incompatible with 2019.5 (an update is in progress). I can post another thread with more info on this addon too, if people want that?

Always cool to see what you put together.
